LLM Application Engineering: model selection & hot-swapping; parameter tuning (temperature/Top-p); context & token budget management; function calling & tool orchestration.

Knowledge Base Engineering: data cleaning & structuring; embeddings & indexing; retrieval-augmented generation (RAG) with consistency validation.

Conversation System Design: multi-turn state management; branching dialogue modeling; intent/slot design; checkpointing & recovery.

Backend & APIs: FastAPI contract design; schema validation; rate limiting & basic authentication.

Database & Persistence: chat trace data modeling; query & index optimization; backup/restore & access control.

Containerization & Release: Docker/Compose proficiency; environment & dependency isolation; health checks & basic orchestration.

CI/CD & Quality Assurance: pipeline setup; automated testing; canary releases & rollback strategies.

Observability & Operations: structured logging; metrics & alerting; slow-query tracing & performance tuning.

Cloud & Networking Fundamentals: load balancing; TLS/HTTPS hardening; secure secrets & configuration management.

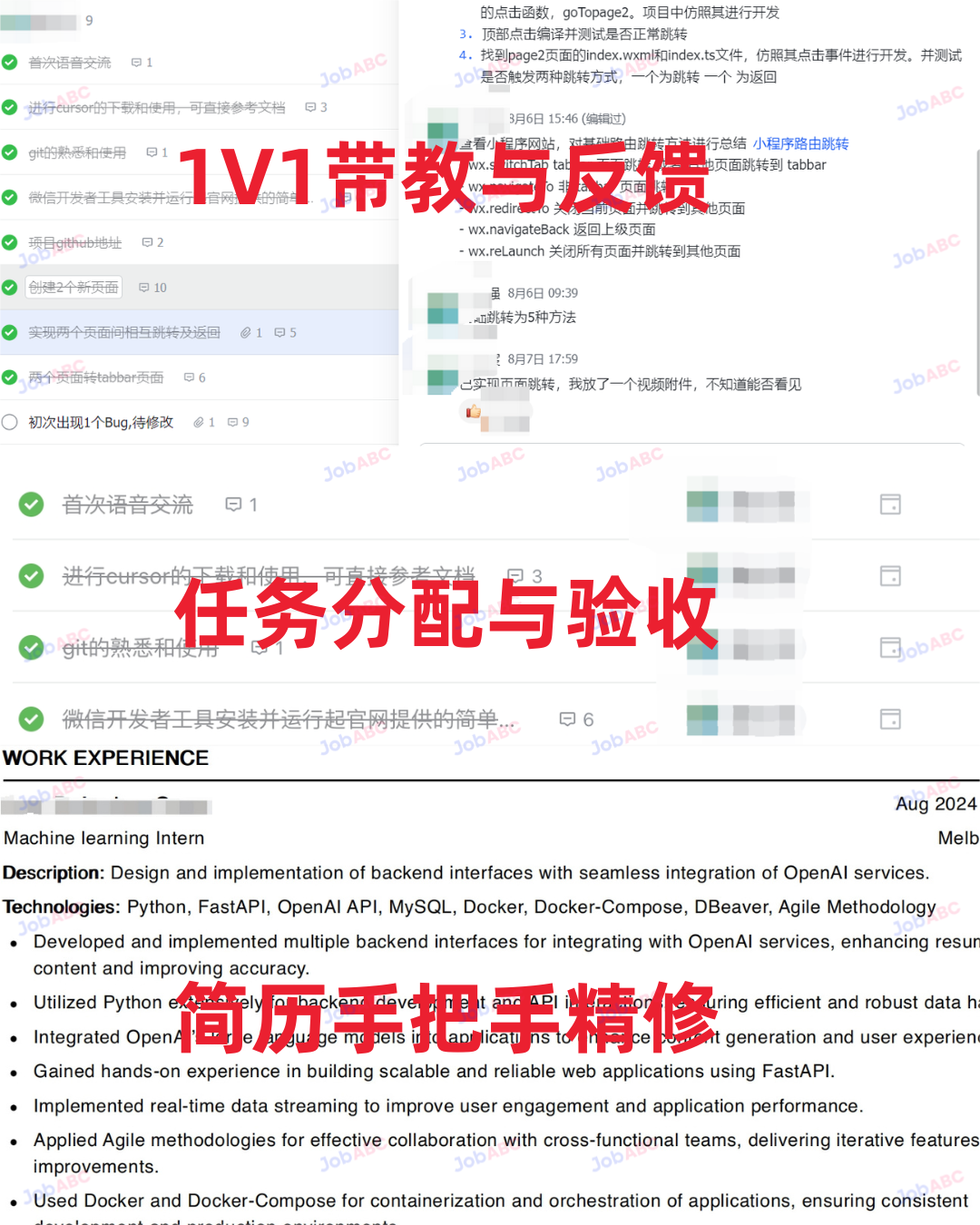

Product Collaboration & Engineering Docs: requirements clarification; task decomposition; milestone cadence; reproducible documentation.

Requirements Overview

1. AI-based automatic generation of welcome messages for the corporate HR alter ego

The system uses AI to automatically generate and send a friendly, professional welcome message at the start of each job seeker interaction, improving the job seeker's experience and guiding them to the next step.

2. Building a data storage cluster based on Docker containerization technology

Build and configure a MySQL storage cluster using Docker to support chat history persistence. This includes choosing an appropriate MySQL version, deploying the cluster with a container orchestration tool such as Docker Compose or Kubernetes, and performing basic configuration such as user privilege management, database creation, and cluster monitoring.

3. Linear and non-linear persistence of chat history

Design and implement persistent storage of chat history that supports both chronological (linear) and branch-jumping (non-linear) conversation records, so that the history is preserved safely and is easy to query and review.
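One common way to cover both storage modes is to give every message a pointer to the message it replies to. The sketch below is an in-memory stand-in for such a table, not the project's actual schema: the names `Message`, `ChatHistory`, `thread`, and `branches` are hypothetical, and in MySQL the same idea would be an `id` column plus a nullable `parent_id` column.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Message:
    id: int
    parent_id: Optional[int]  # None marks the first message of a conversation
    role: str                 # e.g. "user" or "assistant"
    content: str


@dataclass
class ChatHistory:
    """In-memory stand-in for a messages table with id and parent_id columns."""
    messages: Dict[int, Message] = field(default_factory=dict)
    _next_id: int = 1

    def add(self, role: str, content: str, parent_id: Optional[int] = None) -> int:
        # Reject replies to messages that were never stored.
        if parent_id is not None and parent_id not in self.messages:
            raise KeyError(f"unknown parent message {parent_id}")
        msg_id = self._next_id
        self._next_id += 1
        self.messages[msg_id] = Message(msg_id, parent_id, role, content)
        return msg_id

    def thread(self, leaf_id: int) -> List[Message]:
        """Linear view: walk parent pointers from leaf to root, return oldest first."""
        path: List[Message] = []
        current: Optional[int] = leaf_id
        while current is not None:
            msg = self.messages[current]
            path.append(msg)
            current = msg.parent_id
        return list(reversed(path))

    def branches(self, parent_id: int) -> List[Message]:
        """Non-linear view: direct replies to a message; more than one means a branch."""
        return [m for m in self.messages.values() if m.parent_id == parent_id]
```

A purely chronological conversation is the special case where every message has exactly one reply, so `thread` on the last message returns the whole history. A branch appears as soon as two messages share the same `parent_id`.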

4. Enterprise private knowledge base construction and management

Build and manage an enterprise private knowledge base to support knowledge retrieval and question answering for the HR intelligent alter ego. The knowledge base will contain company-specific information such as company culture, job descriptions, hiring processes, and FAQs, and can be regularly updated and expanded. It will serve as the underlying data source for intelligent Q&A with large language models, to keep answers accurate and consistent.
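To illustrate how a private knowledge base can ground an answer, here is a minimal retrieval sketch. It is an assumption-laden toy: word-count vectors stand in for real embeddings, a Python list stands in for the index, and the function names `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    # Lowercase alphanumeric tokens; a real system would use an embedding model.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two word-count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[Tuple[float, str]]:
    """Return up to top_k (score, document) pairs with a non-zero score, best first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [pair for pair in scored[:top_k] if pair[0] > 0]


def build_prompt(query: str, documents: List[str]) -> str:
    """Assemble a prompt that restricts the model to the retrieved entries."""
    hits = retrieve(query, documents)
    context = "\n".join(f"- {text}" for _, text in hits) or "- (no matching entry)"
    return (
        "Answer using only the company information below.\n"
        f"{context}\n"
        f"Question: {query}"
    )
```

Passing the retrieved entries in the prompt, rather than relying on what the model already knows, is what ties the answer to the company's own data.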

5. Intelligent Q&A based on OpenAI and other large language models

Enhance the chatbot's intelligent Q&A capabilities with advanced large language models, such as those from OpenAI, so that the system can analyze and understand job seekers' complex questions and provide accurate, personalized responses.

6. LLM Switching

Support flexible switching between multiple LLMs, so that the most appropriate model can be selected for each conversation based on its content.
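One possible shape for model switching is a small registry that maps names to interchangeable backends. Everything in the sketch below is hypothetical, including the `ModelRouter` class, the backend names, and the word-count routing rule; real backends would wrap calls to the chosen LLM providers.

```python
from typing import Callable, Dict

# A backend is any callable that maps a prompt to a reply.
Backend = Callable[[str], str]


class ModelRouter:
    """Registry of named backends that can be swapped at runtime."""

    def __init__(self, default: str) -> None:
        self._backends: Dict[str, Backend] = {}
        self._default = default  # must be registered before reply() is called

    def register(self, name: str, backend: Backend) -> None:
        self._backends[name] = backend

    def set_default(self, name: str) -> None:
        # Hot-swap: later calls use the new default without a restart.
        if name not in self._backends:
            raise KeyError(f"unknown backend {name!r}")
        self._default = name

    def choose(self, message: str) -> str:
        # Hypothetical rule: send long messages to a backend named "large".
        if len(message.split()) > 50 and "large" in self._backends:
            return "large"
        return self._default

    def reply(self, message: str) -> str:
        return self._backends[self.choose(message)](message)
```

Because callers only see `reply`, a backend can be replaced or the routing rule changed without touching the conversation logic.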

7. Model Configuration and Tuning

Fine-tune LLMs to adapt them to recruitment scenarios, and support dynamic adjustment of model parameters to improve Q&A accuracy.
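Dynamic parameter adjustment can be sketched as a validated configuration object with per-scenario presets. The preset names and default values below are illustrative assumptions, and the allowed ranges are assumed to follow the OpenAI API (temperature from 0 to 2, Top-p up to 1).

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class GenerationConfig:
    temperature: float = 0.7
    top_p: float = 1.0
    max_tokens: int = 512

    def __post_init__(self) -> None:
        # Validate on every construction, including copies made by replace().
        if not 0.0 <= self.temperature <= 2.0:
            raise ValueError("temperature must be in [0, 2]")
        if not 0.0 < self.top_p <= 1.0:
            raise ValueError("top_p must be in (0, 1]")
        if self.max_tokens <= 0:
            raise ValueError("max_tokens must be positive")


# Hypothetical presets: low temperature for factual answers, higher for greetings.
PRESETS = {
    "faq": GenerationConfig(temperature=0.2),
    "welcome": GenerationConfig(temperature=0.9, max_tokens=200),
}


def config_for(scenario: str, **overrides) -> GenerationConfig:
    """Return the preset for a scenario with per-request overrides applied."""
    base = PRESETS.get(scenario, GenerationConfig())
    return replace(base, **overrides)
```

For example, `config_for("faq", top_p=0.5)` keeps the low FAQ temperature while narrowing sampling for a single request.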

8. Multi-Turn Dialogue Management

Implement multi-turn conversation management so that the chatbot can understand and process consecutive turns, maintain context consistency, and recover to the previous state after a conversation is interrupted.
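Recovery after an interruption usually comes down to serializing the conversation state at each turn and reloading it later. The following is a minimal sketch under that assumption; `DialogueState`, its fields, and the JSON checkpoint format are hypothetical, and the returned string would be written to whatever store the project uses.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class DialogueState:
    session_id: str
    turns: List[Dict[str, str]] = field(default_factory=list)
    step: str = "greeting"  # current position in the dialogue flow

    def add_turn(self, role: str, content: str) -> None:
        self.turns.append({"role": role, "content": content})


def save_checkpoint(state: DialogueState) -> str:
    """Serialize the full state to JSON so it can be persisted after each turn."""
    return json.dumps(asdict(state))


def load_checkpoint(payload: str) -> DialogueState:
    """Rebuild the state from a stored checkpoint."""
    return DialogueState(**json.loads(payload))
```

Saving after every turn means an interrupted session loses at most the turn that was in flight.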

9. Job seeker information collection

During the dialogue with job seekers, the system actively collects key information such as name, contact information, work experience, and skills. Working from a preset list of fields to collect, the chatbot asks relevant questions at the right time and stores the collected information in a structured database to support subsequent recruitment decisions and processes.
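The preset collection scope can be modeled as an ordered list of required fields, with the chatbot asking about the first one still missing. The field names and question wording in this sketch are illustrative assumptions.

```python
from typing import Dict, Optional

# Hypothetical collection scope: field name -> question to ask when it is missing.
REQUIRED_FIELDS: Dict[str, str] = {
    "name": "May I have your full name?",
    "contact": "What is the best email or phone number to reach you?",
    "experience": "Could you summarize your work experience?",
    "skills": "Which skills are most relevant to this role?",
}


def next_question(profile: Dict[str, str]) -> Optional[str]:
    """Return the question for the first missing field, or None when complete."""
    for field_name, question in REQUIRED_FIELDS.items():
        if not profile.get(field_name, "").strip():
            return question
    return None


def is_complete(profile: Dict[str, str]) -> bool:
    return next_question(profile) is None
```

A profile that passes `is_complete` is ready to be stored as a structured record and handed to the reporting step.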

10. Candidate Information Summary and Email Push

Once the collected information is deemed complete, the system automatically summarizes it into a concise candidate report. The system can then email the report to the organization's HR team, so that HR can quickly assess the applicant's qualifications and recruitment becomes more efficient.
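The report step can be sketched with Python's standard `email` package. The function name, subject line, and addresses below are placeholders, and the sketch only builds the message; it does not send it.

```python
from email.message import EmailMessage
from typing import Dict


def build_report_email(profile: Dict[str, str], sender: str, recipient: str) -> EmailMessage:
    """Turn a collected candidate profile into a plain-text report email."""
    lines = [f"{key.capitalize()}: {value}" for key, value in profile.items()]
    msg = EmailMessage()
    msg["Subject"] = f"Candidate report: {profile.get('name', 'unknown')}"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("\n".join(lines))
    return msg
```

Delivery could then go through `smtplib.SMTP(...).send_message(msg)` against the organization's mail server, with the host and credentials supplied through configuration.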

Large model techniques and engineering technologies involved in the project

Large Language Models (LLMs)

The OpenAI GPT Family

Model fine-tuning and parameter tuning techniques

Multilingual Support and Contextual Understanding

Containerization & Cluster Management

Docker Container Technology

Docker Compose

Kubernetes (optional)

Databases & Persistence Technologies

MySQL Databases

Database Clustering and Backup

Persistent Storage and Index Optimization

AI & NLP Technologies

Natural Language Processing (NLP)

Text Generation and Intelligent Q&A

Multi-Turn Dialogue Management

Information Collection and Processing

Structured Data Storage

Information Aggregation and Report Generation

Automated email push

Continuous Integration and Continuous Deployment (CI/CD)

Jenkins, GitLab CI/CD or other CI/CD tools

Automated test and deployment pipelines

Version control and rollback policies

Operating Systems and Application Environments

Linux operating systems (e.g. Ubuntu, CentOS)

Shell scripting and automation management

System monitoring and log management

Cloud Services & Infrastructure

Cloud service platforms such as AWS, Azure or GCP

Cloud database and storage services

Load balancing and auto-scaling

Web Services Deployment and Management

FastAPI: Used to build high-performance API services that support rapid development and deployment.

Nginx: Used as a reverse proxy and load balancer and for serving static assets, to support high availability and performance of web services.

HTTP/HTTPS Configuration: Configure secure Web services that support SSL/TLS encryption.

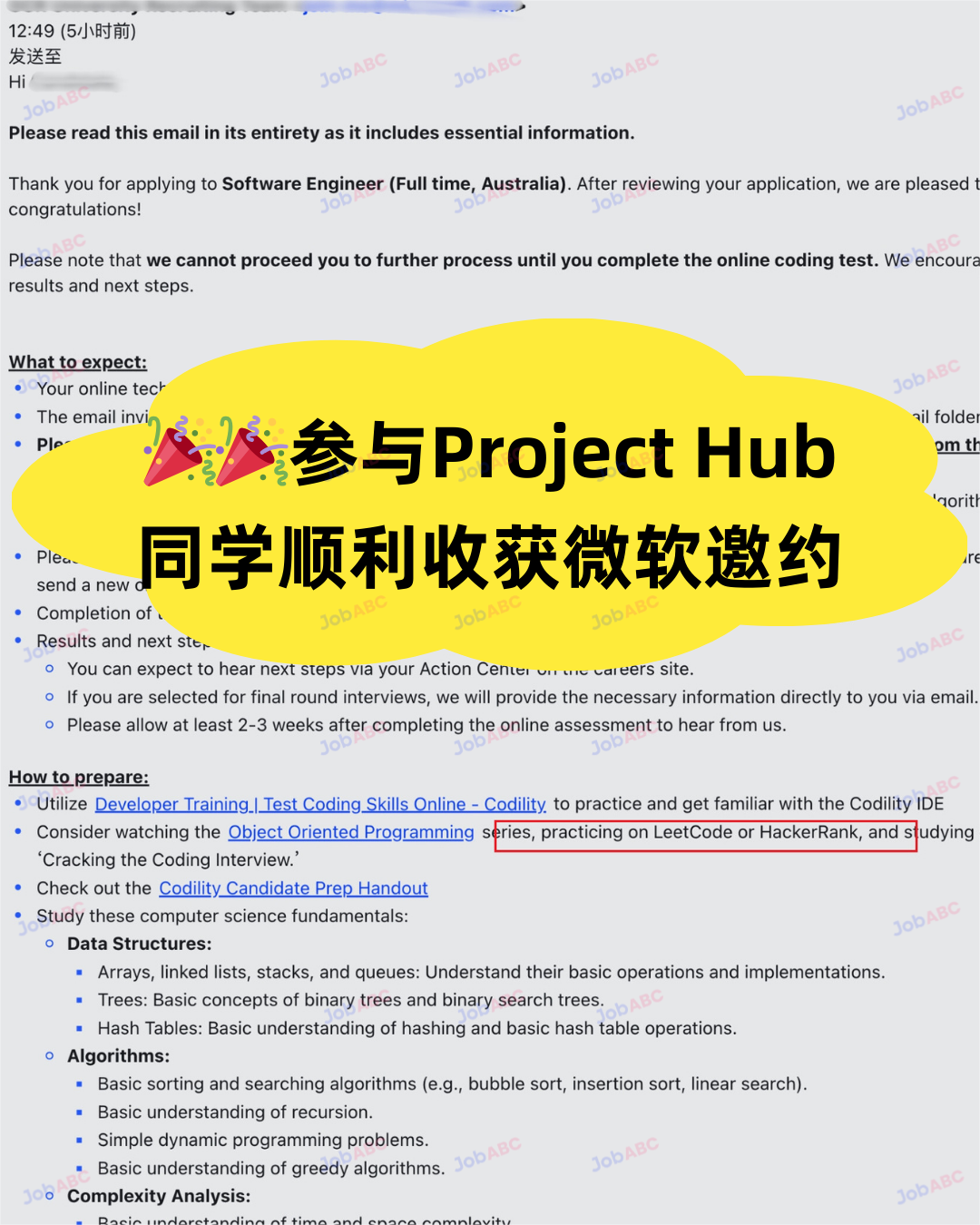

Majors in IT, Computer Science, Artificial Intelligence, or related disciplines.

A technology platform providing innovative solutions across major industries in Australia.

The company has successfully delivered multiple technology innovation projects, including the practical implementation of Web 3.0 and FinTech concepts and applications.